Minu Jeong

Benchmark here can be vary from various versions and situations. Just look at the numbers as proof of concept, not as budget reference for existing projects.

Heads Up

Better/Faster Python

Numpy, PyCuda, Cupy, Tensorflow

Optimize

Compute Shader

Q&A

Interpreted language (No compile)

Faster iteration

Compatibility

Easy

Less mistakes for less trained coders

Slow

Hardware accessibiilty

Security (when client side)

Interpretation

Interpreter lock

No primitives

No forced typing

Implicit processing

Accept it, let your application slow

Solve with hardwares (better CPU, more servers, etc)

Use other languages

C/C++/C# is fast, C# is even easy, too

Java is faster, although with it's infamous VM

Even JavaScript is faster, with VM and duck typing

Ruby could be faster in certain situations

...

Write efficient code, Usually it will be enough

Use faster Python: Cython, Stackless, PyPy, etc

Use C/C++ wrapping: Numpy or custom

...

Use GPU

Efficient code? What does it means?

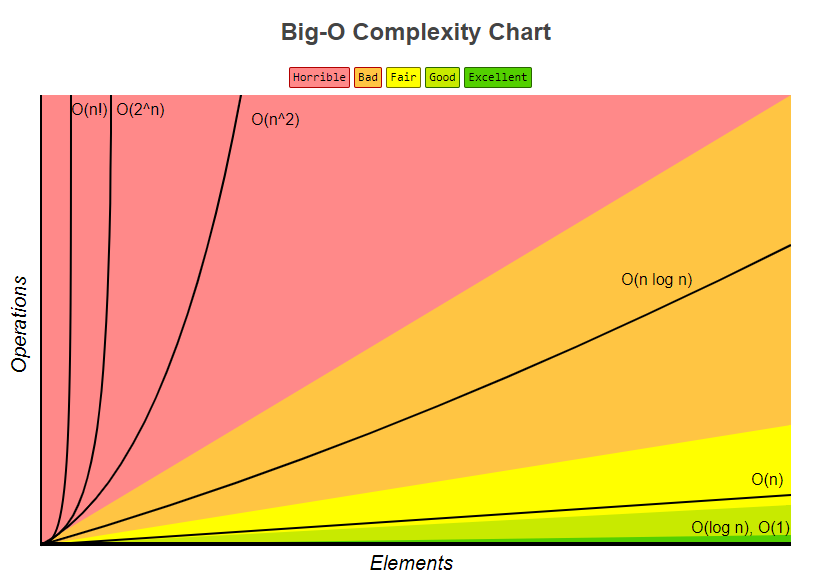

Use efficient algorithm. Mind the O-complexity

Generally good for other languages are usually good for Python, too.

Use Pythonic code

http://bigocheatsheet.com/

# cache

import timeit

import random

def non_cached():

count = 0

for _ in range(50):

a = [None] * random.randrange(1, 50)

if len(a) > 15:

count += len(a)

return count

def cached():

count = 0

for _ in range(50):

a = [None] * random.randrange(1, 50)

len_a = len(a)

if len_a > 15:

count += len_a

return count

print(

timeit.timeit(non_cached, number=10000),

timeit.timeit(cached, number=10000)

)

non_cached: 0.6148

cached: 0.5750

# string concat

import timeit

user = "minu"

def concatenated():

return "Hello, " + user + "!"

def formatted():

return f"Hello, {user}!"

print(

timeit.timeit(concatenated),

timeit.timeit(formatted)

)

concat: 0.1617

format: 0.1458

# builtin

from functools import partial

import timeit

a = [x for x in range(100000)]

def pysum_list(t):

sum_x = 0

for x in t:

sum_x += x

return x

print(

timeit.timeit(partial(pysum_list, a), number=1000),

timeit.timeit(partial(sum, a), number=1000)

)

custom: 3.8897

builtin: 1.3365

# function call overhead

import timeit

summed_1 = 0

summed_2 = 0

def calc1(item):

global summed_1

summed_1 += item

def calc2(container):

global summed_2

for i in container:

summed_2 += i

print(

timeit.timeit(

lambda: [calc1(i) for i in range(10)],

number=1000000

),

timeit.timeit(

lambda: calc2([i for i in range(10)]),

number=1000000

),

)

calc1: 1.9375

calc2: 1.3911

Cython

Stackless Python

PyPy

Different from C-Python

Compile Python to C

Lift limitation of interpreted language

Need to be compiled

Can use pure Python

Decouples Python code from C stack

Allow you to use green threads instead of system threads

Solution for massive concurrency

Picklable tasklets

Awaits IO system call, network packets, etc with only one tasklet

Turing machine and real world

# without stackless python

def ping():

print("ping")

pong()

def pong():

print("pong")

ping()

ping()

https://www.grant-olson.net/

| Frame | Stack |

|---|---|

| 1 | ping -> |

| 2 | ping -> pong -> |

| 3 | ping -> pong -> ping -> |

| 4 | ping -> pong -> ping -> pong -> |

| 5 | ping -> pong -> ping -> pong -> ping -> |

| 6 | ping -> pong -> ping -> pong -> ping -> pong -> |

| ... | ... |

| ... | STACK OVERFLOW! |

# with stackless python

import stackless

ping_channel = stackless.channel()

pong_channel = stackless.channel()

def ping():

while ping_channel.receive():

print("ping")

pong_channel.send('from ping')

def pong():

while pong_channel.receive():

print("pong")

ping_channel.send('from pong')

stackless.tasklet(ping)()

stackless.tasklet(pong)()

stackless.tasklet(ping_channel.send)('start')

stackless.run()

https://www.grant-olson.net/

| Frame | Stack |

|---|---|

| 1 | ping -> |

| 2 | pong -> |

| 3 | ping -> |

| 4 | pong -> |

| 5 | ping -> |

| 6 | pong -> |

| ... | ... |

| ... | No stack overflow |

Python implemented in Python

Faster than C-Python

Not as fast as Cython, but compile not required

Stackless included for micro-threads

Better memory efficiency

Still slower than C/C++/C#

Compatibility issue with libraries

Compatibility issue with DCC tools

Multi dimensional array crunching

Integrate C/C++/Fortran

Intel MKL or OpenBLAS

Still only using CPU

# numpy random

# easier to read, much faster

import random

import timeit

import numpy as np

def numpyrand():

return np.random.uniform(0.0, 1.0, size=(100, 100))

def pyrand():

result = []

for x in range(100):

result.append([])

for y in range(100):

result[x].append(random.random())

return result

print(

timeit.timeit(numpyrand, number=1000),

timeit.timeit(pyrand, number=1000)

)

# numpy with Intel MKL

numpy random: 0.0855

python random: 1.5828

Scipy: Python for science

Pandas: Python data analysis library on top of numpy

Numba: LLVM(Clang) compiled python with GPU

Tiny numpy: Header only numpy

Python integrated with CUDA

CUDA is GPGPU framework provided by NVIDIA

CUDA code compile on runtime

Compatible with Numpy

Provides basic calculation APIs, as well as runtime cuda compile

# PyCuda

from functools import partial

import timeit

import numpy as np

import pycuda.autoinit

from pycuda.curandom import rand

def numpyrand(size):

return np.random.uniform(0.0, 1.0, size=size)

def pycudarand(size):

return rand(size, dtype=np.float32)

print(

timeit.timeit(partial(numpyrand, (100, 100)), number=1),

timeit.timeit(partial(numpyrand, (1000, 1000)), number=1),

timeit.timeit(partial(numpyrand, (10000, 10000)), number=1),

timeit.timeit(partial(numpyrand, (10000, 100000)), number=1)

)

print(

timeit.timeit(partial(pycudarand, (100, 100)), number=1),

timeit.timeit(partial(pycudarand, (1000, 1000)), number=1),

timeit.timeit(partial(pycudarand, (10000, 10000)), number=1),

timeit.timeit(partial(pycudarand, (10000, 100000)), number=1),

)

# Numpy performance linearly drops by size of calculation

# because Numpy is using CPU

(100, 100) numpy: 0.0004

(1000, 1000) numpy: 0.0112

(10000, 10000) numpy: 1.1485

(10000, 100000) numpy: 11.066

# While pure Python drops unutilizable already at (10000, 10000)

(10000, 10000) python: 16.5806

# PyCuda performance is slower at first, because of PIPE construction,

# but doesn't drop performance much even if calculation

# exponentially increases while increasing

# from 10,000 randoms to 1,000,000,000 randoms

(100, 100) pycuda: 1.2710

(1000, 1000) pycuda: 1.2507

(10000, 10000) pycuda: 1.3872

(10000, 100000) pycuda: 1.5752

Drop-in Numpy replacement

Not all Numpy APIs are compatible yet

Support CUDA

Provided as subset of Chainer

# Cupy uses almost same API with Numpy,

# while calculation is moved to GPU

from functools import partial

import timeit

import numpy as np

import cupy as cp

cp.random.uniform(0.0, 1.0, size=(1, 1))

def numpyrand(size):

return np.random.uniform(0.0, 1.0, size=size)

def cupyrand(size):

return cp.random.uniform(0.0, 1.0, size=size)

print(

timeit.timeit(partial(numpyrand, (100, 100)), number=1),

timeit.timeit(partial(numpyrand, (10000, 10000)), number=1)

)

print(

timeit.timeit(partial(cupyrand, (100, 100)), number=1),

timeit.timeit(partial(cupyrand, (10000, 10000)), number=1)

)

# numpy

(100, 100): 0.0001

(10000, 10000): 1.1305

# cupy

(100, 100): 0.0003

(10000, 10000): 0.0755

Machine Learning framework

Maintained by Google

Well documented, many references

Calculation should be 'compiled' before computed

Implements own way of number crunching

Provides a lot of utilities: distribute, visualize, etc

# tensorflow-gpu

from functools import partial

import timeit

import tensorflow as tf

def tfrandom(size):

return tf.random_uniform(size, 0.0, 1.0)

with tf.Session() as session:

c1 = partial(session.run, tfrandom((10, 10)))

c2 = partial(session.run, tfrandom((100, 100)))

c3 = partial(session.run, tfrandom((1000, 1000)))

c4 = partial(session.run, tfrandom((100, 100)))

c5 = partial(session.run, tfrandom((100, 100)))

t1 = timeit.timeit(c1, number=1)

t2 = timeit.timeit(c2, number=1)

t3 = timeit.timeit(c3, number=1)

t4 = timeit.timeit(c4, number=1)

t5 = timeit.timeit(c5, number=100)

print(f"{t1:.4}, {t2:.4}, {t3:.4}, {t4:.4}, {t5:.4}")

# size: cost

# possibly contains some caching

(10, 10): 0.0096

(100, 100): 0.0054

(1000, 1000): 0.0089

# 100 times calculation doesn't consume 100 more time

# next calculation is being assigned to empty processors

(100, 100), '100 times': 0.0419

Parallelize

Context switching

Instructions

Grid, Block, Thread, Warp

Profiling

This is why Python code runs faster than C++ in certain manner

Difference between CPU and GPU

CUDA code take advantage over parallel computing

Each unit is slower, but there are many units

Bad at branching

Heterogenoeus computing: serial(CPU) and parallel(GPU)

# context switching

# host and device

import timeit

import cupy as cp

def a():

return cp.random.uniform(0.0, 1.0, (256, 256))

def b():

return cp.random.uniform(0.0, 1.0, (1000, 256, 256))

ta = timeit.timeit(a, number=10000)

tb = timeit.timeit(b, number=10)

print(ta, tb)

# normal: 1.0653

# less-switching: 0.0527

Same with Shader

Free SFU ops(swizzle, saturate, sin, etc)

SFU(special function unit) has it's own cap (~20%)

Avoid branching

Grid

Block

Thread

Warp

SFU

Contains multiple blocks

Number of threads and blocks should be specified on initialize

Global memory access

Contains multiple threads

Shared access memory

~64kb cache (>fermi)

Single instruction executor

Local memory access

Execution unit consists of 32 parallel threads in a block

Executes multiple(~32) threads together

Threads in a warp shares exactly the same code

Scheduler may execute multiple warps concurrently (>fermi)

Stands for special function unit

Executes transcendental instructions

sin, cos, 1/x, sqrt, saturate, etc

One instruction per thread, per clock

(A warp executes over 8 clocks)

Decoupled from dispatch unit: "FREE" calculation

Simply counting time is not accurate

Dynamic Parallelism (>Kepler)

Concurrency

Different by device, driver, situation, etc

NSight

When you write your own custom CUDA code

Aligned memory access

Shared Memory

Texture Fetch instead of Buffer when possible

Coalescing?

Global memory access (not local)

Coalesced access pattern

Misaligned access pattern

Striding access pattern

"Shared" among threads in a block

Can be used to avoid uncoalesced memory access

Avoid serialization, or will cause host sync

Another perspective of utilizing GPU

Use shader language to define computation

Nothing directly related to rendering

Common shader: Read vertex/face index/texture, write to frame buffers

Compute shaders: Read buffers, write to buffers

For technical artists, this could be more intuitive

# compute shader

import moderngl

import numpy as np

compute_shader_source = """

#version 430

layout (local_size_x=64) in;

layout (std430, binding=1) buffer outs_1

{

// 4 bytes

float xs[1];

};

layout (std430, binding=2) buffer outs_2

{

// 4 bytes

int ys[1];

};

void main()

{

// invocation index

uint i = gl_LocalInvocationIndex;

// 0.0 ~ 126.0

xs[i] = float(i) * 2.0;

// 0 ~ 63

ys[i] = int(i);

}

"""

# prepare and compile compute shader

context = moderngl.create_standalone_context(require=430)

compute_shader = context.compute_shader(compute_shader_source)

# prepare buffer

default_outs_buffer_1 = np.linspace(0.0, 10.0, 64).astype(np.float32)

default_outs_buffer_2 = np.linspace(0.0, 10.0, 64).astype(np.int32)

default_outs_buffer_1 = default_outs_buffer_1.reshape((8, 8))

default_outs_buffer_2 = default_outs_buffer_2.reshape((8, 8))

# bind buffer

outs_buffer_1 = context.buffer(default_outs_buffer_1)

outs_buffer_2 = context.buffer(default_outs_buffer_2)

outs_buffer_1.bind_to_storage_buffer(1)

outs_buffer_2.bind_to_storage_buffer(2)

# execute

compute_shader.run()

# read buffer

outs_buffer_1 = outs_buffer_1.read()

outs_buffer_2 = outs_buffer_2.read()

# format read buffer

data_1 = np.frombuffer(outs_buffer_1, dtype=np.float32)

data_1 = data_1.reshape((8, 8))

data_2 = np.frombuffer(outs_buffer_2, dtype=np.int32)

data_2 = data_2.reshape((8, 8))

# out

print(data_1)

print(data_2)

# OUTPUTS:

# data_1

[[ 0. 2. 4. 6. 8. 10. 12. 14.]

[ 16. 18. 20. 22. 24. 26. 28. 30.]

[ 32. 34. 36. 38. 40. 42. 44. 46.]

[ 48. 50. 52. 54. 56. 58. 60. 62.]

[ 64. 66. 68. 70. 72. 74. 76. 78.]

[ 80. 82. 84. 86. 88. 90. 92. 94.]

[ 96. 98. 100. 102. 104. 106. 108. 110.]

[112. 114. 116. 118. 120. 122. 124. 126.]]

# data_2

[[ 0 1 2 3 4 5 6 7]

[ 8 9 10 11 12 13 14 15]

[16 17 18 19 20 21 22 23]

[24 25 26 27 28 29 30 31]

[32 33 34 35 36 37 38 39]

[40 41 42 43 44 45 46 47]

[48 49 50 51 52 53 54 55]

[56 57 58 59 60 61 62 63]]